Originally published at: https://www.ronenbekerman.com/photo-matching-with-fstorm/

CASE STUDY by Matthew Hallett

Photo Matching with Fstorm

Photorealism may no longer be the primary goal for the ArchViz industry as a whole, since advances in hardware and software have largely achieved it. And yet, from time to time, someone still manages to surprise me with a push toward realism. This work by Matthew Hallett is one such case.


“In this project, I challenged myself not just to render a realistic scene but to create the objects and materials using new techniques as well…”

Matthew Hallett

Architectural visualization artist – Hallett Visual

Introduction

Having a dedicated post on Ronen’s blog has been my goal since I started in architectural rendering back in 2009, inspired by the work of Bertrand Benoit and Peter Guthrie. In this case, I challenged myself not just to render a realistic scene but to create the objects and materials using new techniques. Thank you for being interested in my project, and thank you to Ronen for posting my work. Below is the set of still images I produced during this project, with the photos next to the renders.


Gathering References

Since I had access to the home, I used my mobile phone to capture as many reference images as possible. Although it is possible to camera-match from a single photo, you can never have too many reference images, and this matters even more when modeling fine details. Photos of the exterior were combined and roughly mapped onto simple boxes placed at a distance to create a matching backplate.

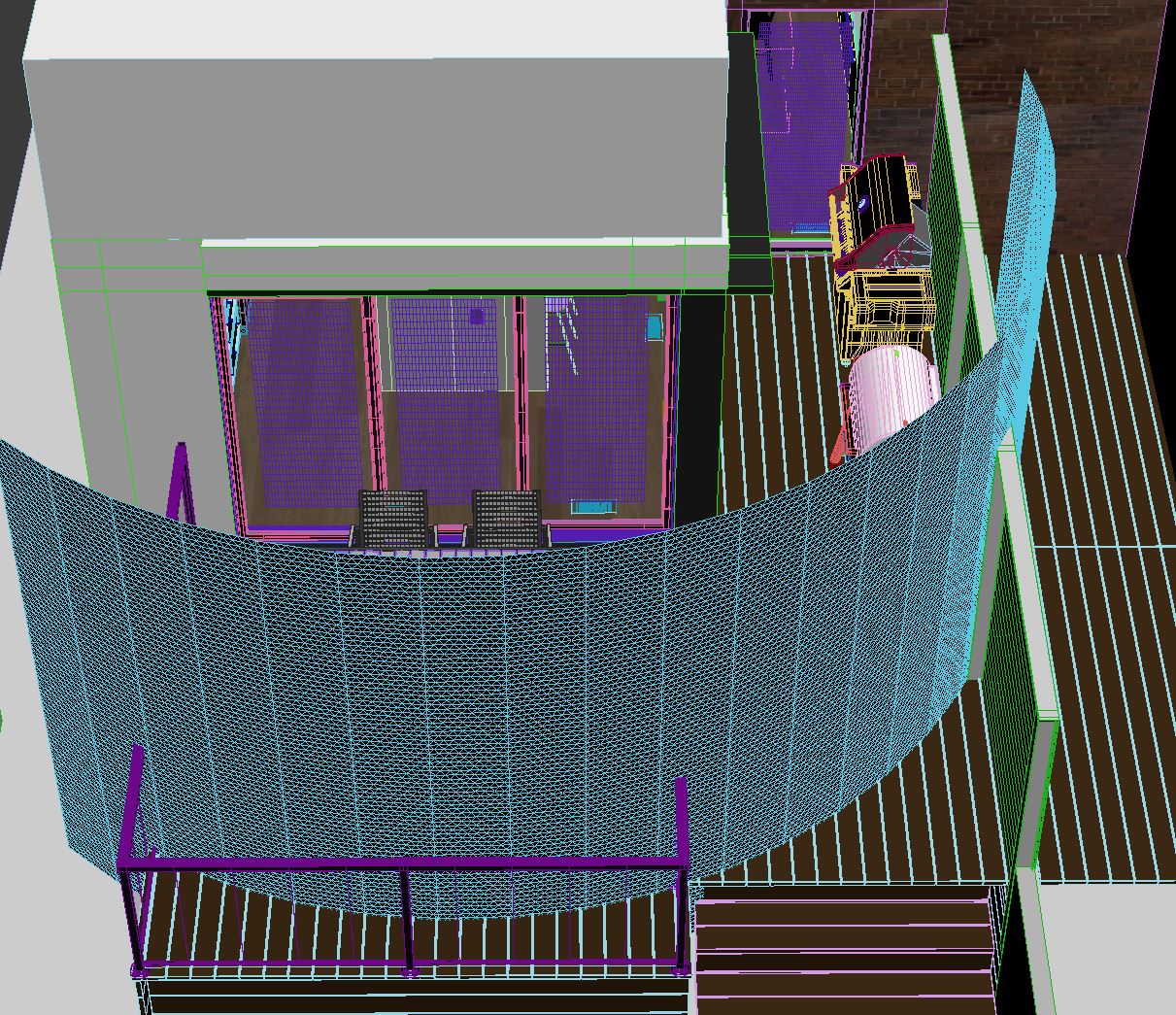

3D Modeling

Every element had to be an exact reproduction to match the photography. Starting with the kitchen appliances, I took measurement notes to use alongside the reference images. Many manufacturers offer free 3D files of their products directly on their websites, and 3D Warehouse is another good site for quick, free models. Free models often need refinement and extra detail, but they are a good starting point.

The Stool Model

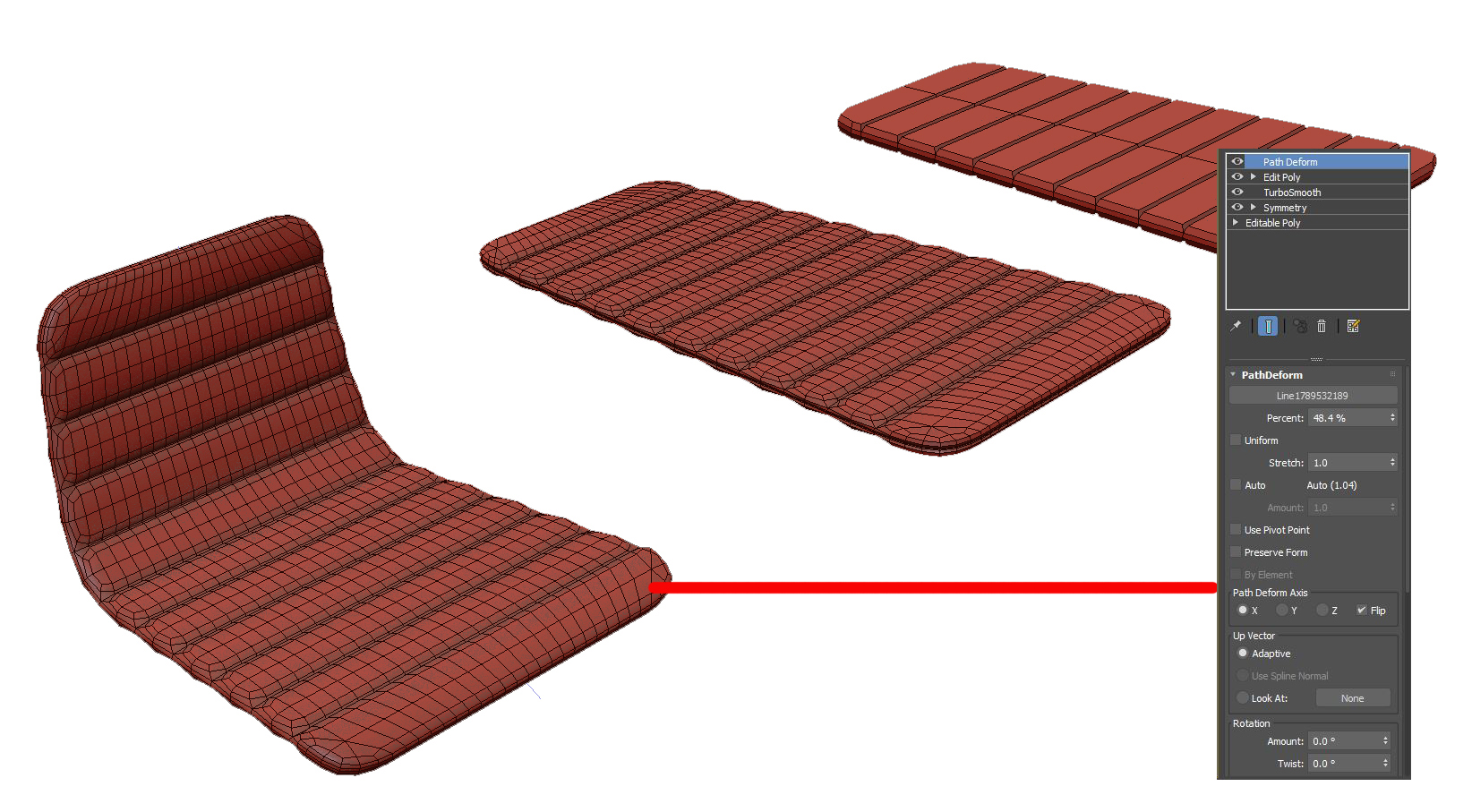

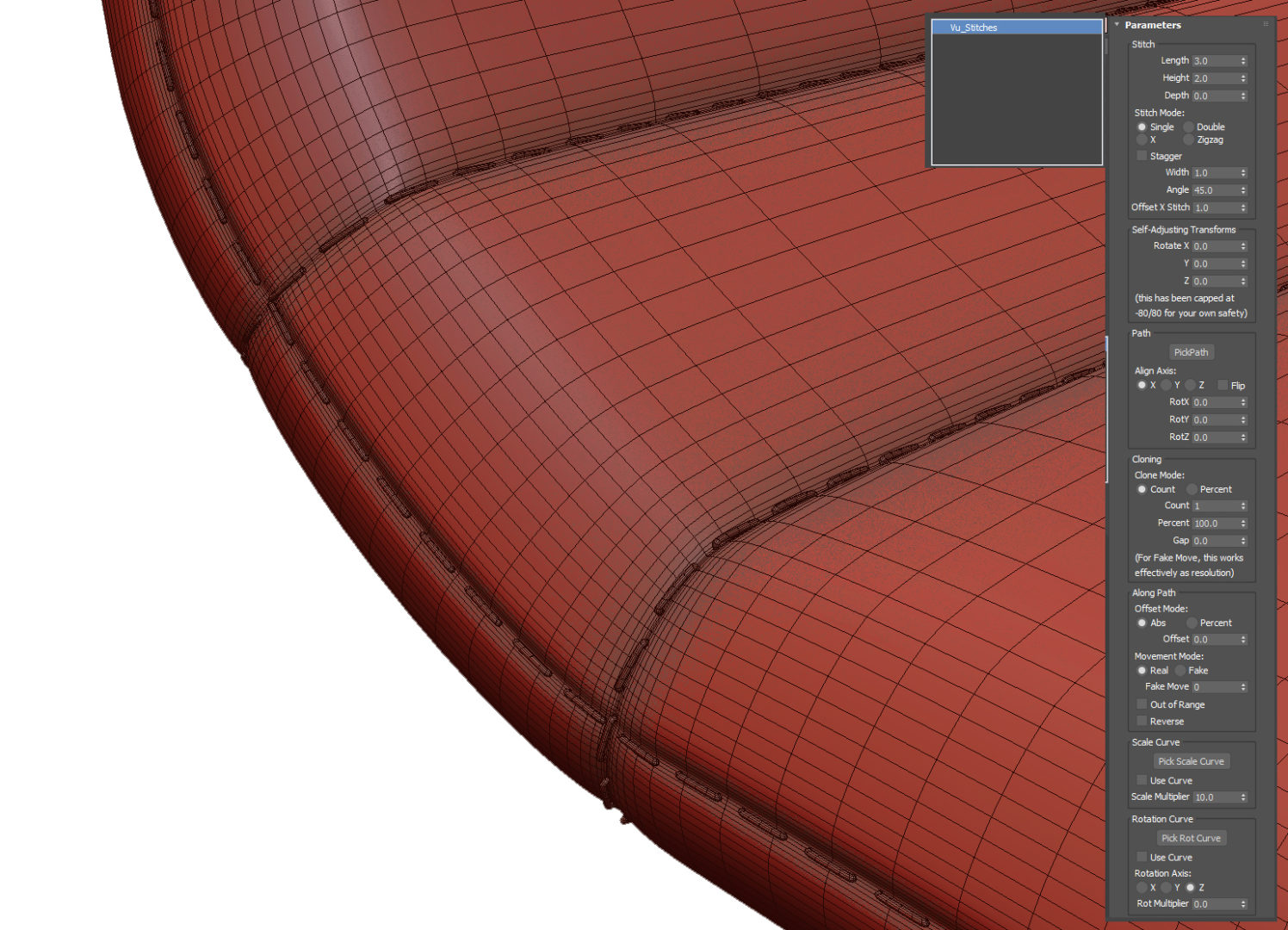

To create the stool, I imagined it as a flat object bent to form the back and seat. Starting with simple polygons, I crudely shaped the folds and added thickness, knowing the mesh would be smoothed with a TurboSmooth modifier. I then bent that mesh with a PathDeform modifier to create the final shape. The stitches were made from a spline generated from the final mesh, using the MCG Stitches script.

The Table Model

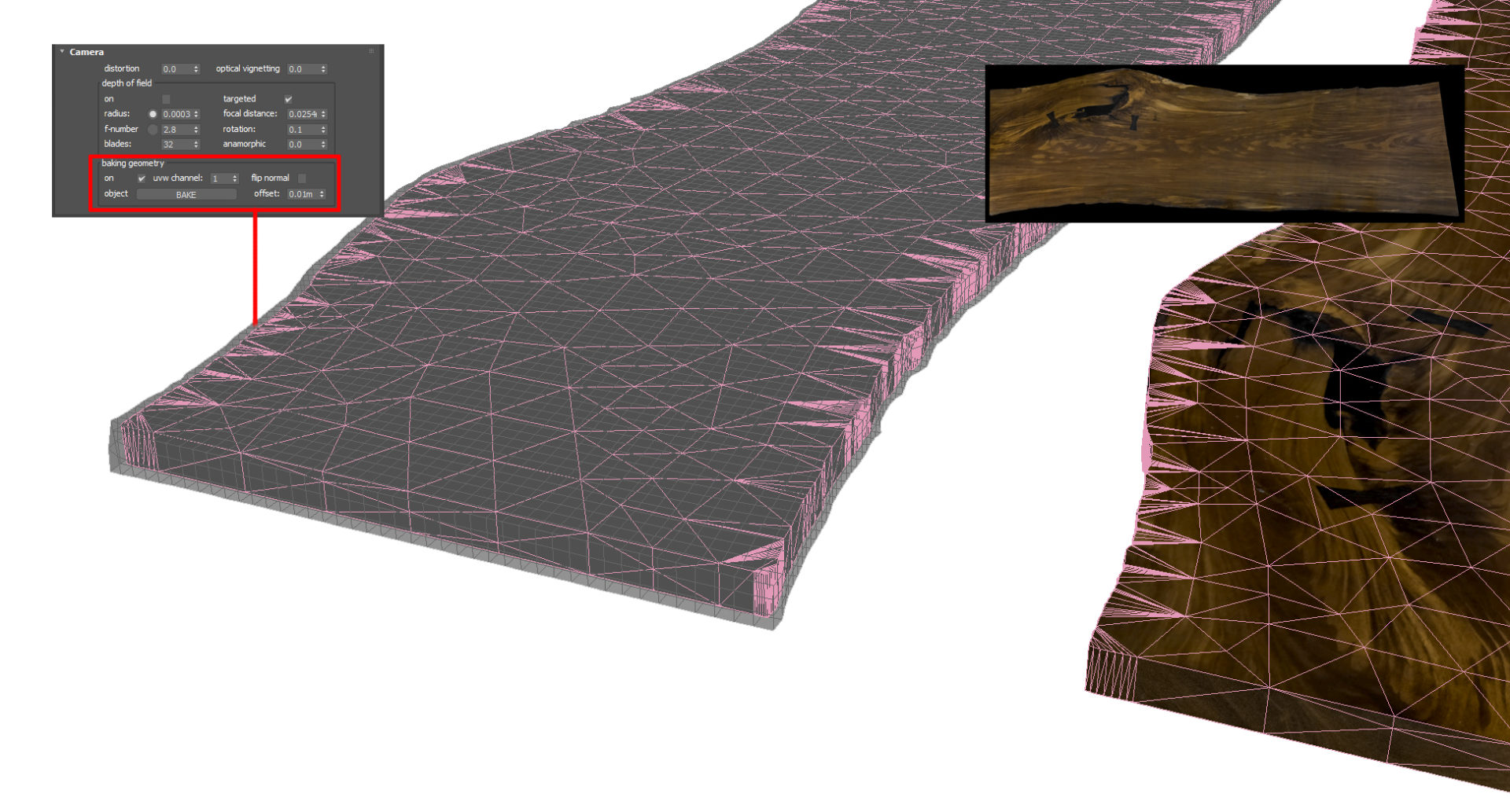

I took a series of photos of the live-edge table, planning to build a mesh with photogrammetry, but I couldn’t generate a usable mesh. Instead, I photo-merged the different surfaces in Photoshop and mapped them onto an extruded spline of the table. An easy way to create splines that perfectly match a 2D image is to outline the shape with a marquee selection in Photoshop, right-click and select “Make Work Path,” then choose “File > Export > Paths to Illustrator.”

Then import that .AI file into 3ds Max, extrude it, and apply a UVW Map modifier fitted to the bounding box. You now have a perfect cut-out of your 2D shape.

To generate a clean mesh with a single texture, I copied the extruded spline and added a Subdivide modifier followed by the Retopology modifier (introduced in 3ds Max 2021).

Because this new mesh is texture-baked from the extruded mesh, I added a Push modifier to make it slightly larger than the extruded mesh so that it fully encloses the source during the bake.

With a clean mesh and a single diffuse texture, I generated noise and reflection maps in Photoshop. To add extra detail in the very bumpy areas of the table, I tessellated the selected polygons in those areas and applied a Displace modifier driven by a bump map generated from the diffuse.

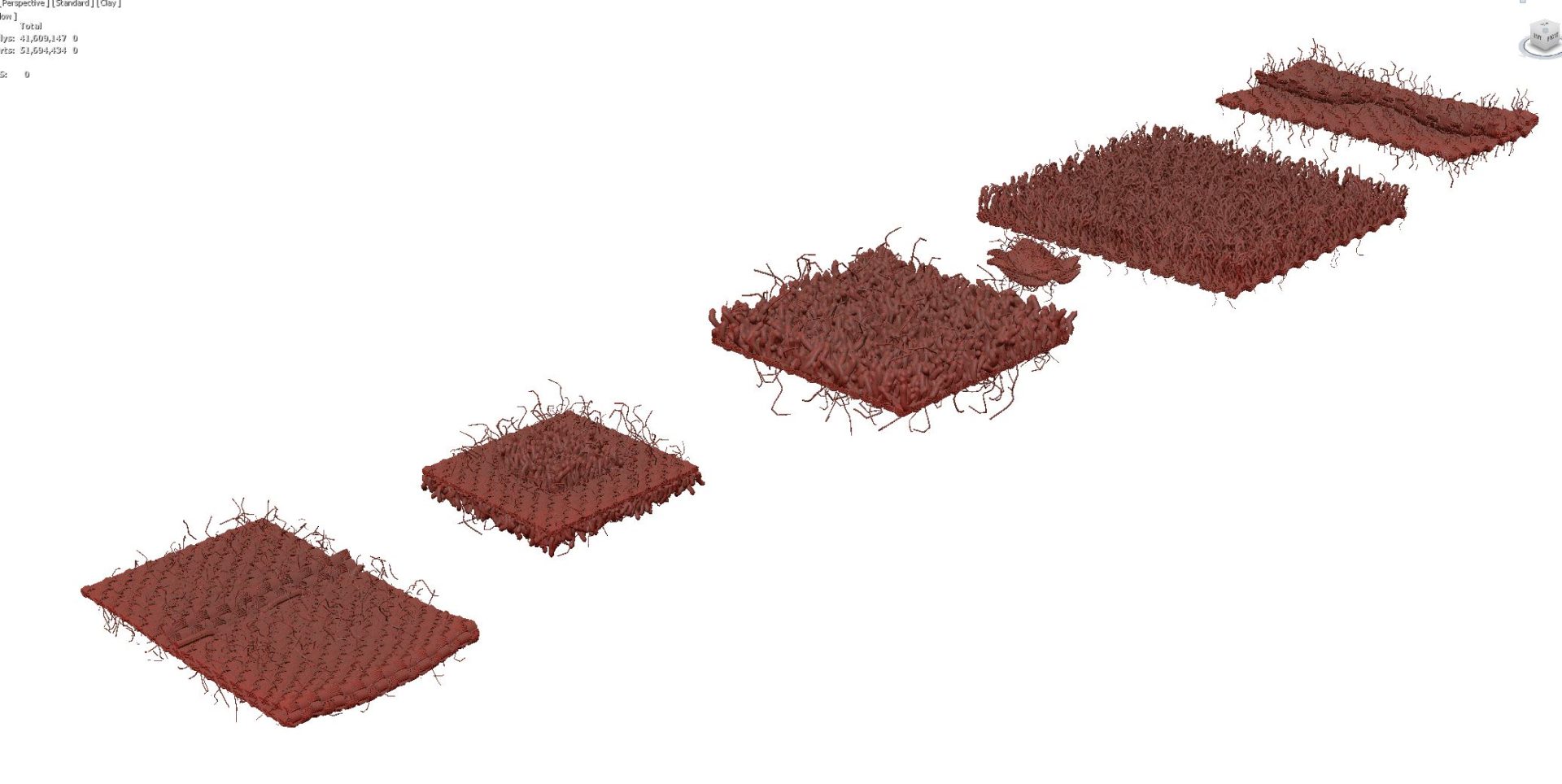

Cloth and tyFlow

The cloth was simulated with tyFlow. The fine cloth details come from a mesh I created to match the towels: a repeating pattern scattered with the Fstorm Geo Pattern. I love Geo Patterns; it’s texture mapping, but in 3D. The skull towel was simulated with a simplified proxy plane, because the detailed skull towel mesh contained irregular triangles that broke the simulation. The detailed mesh was then linked directly to the tyFlow object with a Skin Wrap modifier.

Textures & Materials

The Floors

For the floors, I used Substance Player with Poliigon’s Wood Floor Generator smart material. I was surprised how well it matched, given that it is procedurally generated. I added some dust and dirt to the reflection layers in Photoshop, but it doesn’t really show up in these renderings.

The quartzite countertop texture is a photo of the slab taken before it was installed, with the highlights and shadows retouched in Photoshop; it is not tileable. Since my only camera is the one on my phone, I denoised and enlarged the photo with Topaz Gigapixel. Natural quartzite is translucent, and while I would have loved to include that look in the renderings, it wasn’t necessary. The material does use an Fstorm MixMaterial to blend in a “clear coat” layer, giving that extra high-polish gloss similar to a car paint shader.

The stainless-steel material was the most challenging for me. I could not get a single material to work across all objects and lighting conditions! I never fully settled on the right IOR and anisotropy amount, ending up with 11 and 0.65, respectively. I recommend placing a gradient map in the anisotropy direction slot and adjusting its rotation until the specular highlight matches your object.

Lighting

Most of the interior is lit by a free HDRI map I found on NoEmotion.net. Because I was trying to match real-life photos, I needed to recreate the entire exterior as it was on the day of the shoot and use an HDRI that matched those conditions. Without an accurate exterior model, the global illumination never quite matched.

Interior photography often uses a soft, indirect flash to balance the image, so I recreated this with a soft disk light placed behind the camera and pointing away from the scene.

In addition to the flash, there are Fstorm light portals outside all the glazing. For the large rear patio doors, I found that a single wide extruded arc produced more accurate results than the typical plane placed coplanar with the glazing.

Because the final photographs are themselves HDR images composited from several different lighting configurations, I found that an additional light source was needed. Each window has a self-illuminated material carefully tuned to provide a minimal amount of white light.

Rendering

Fstorm render settings are straightforward. The only things to adjust per camera are the tone mapping, glare, and camera settings. The environment multiplier stays at 1.0 for all cameras, and the kernel exposure is set to 200 for brighter areas and 250 for dimmer ones.

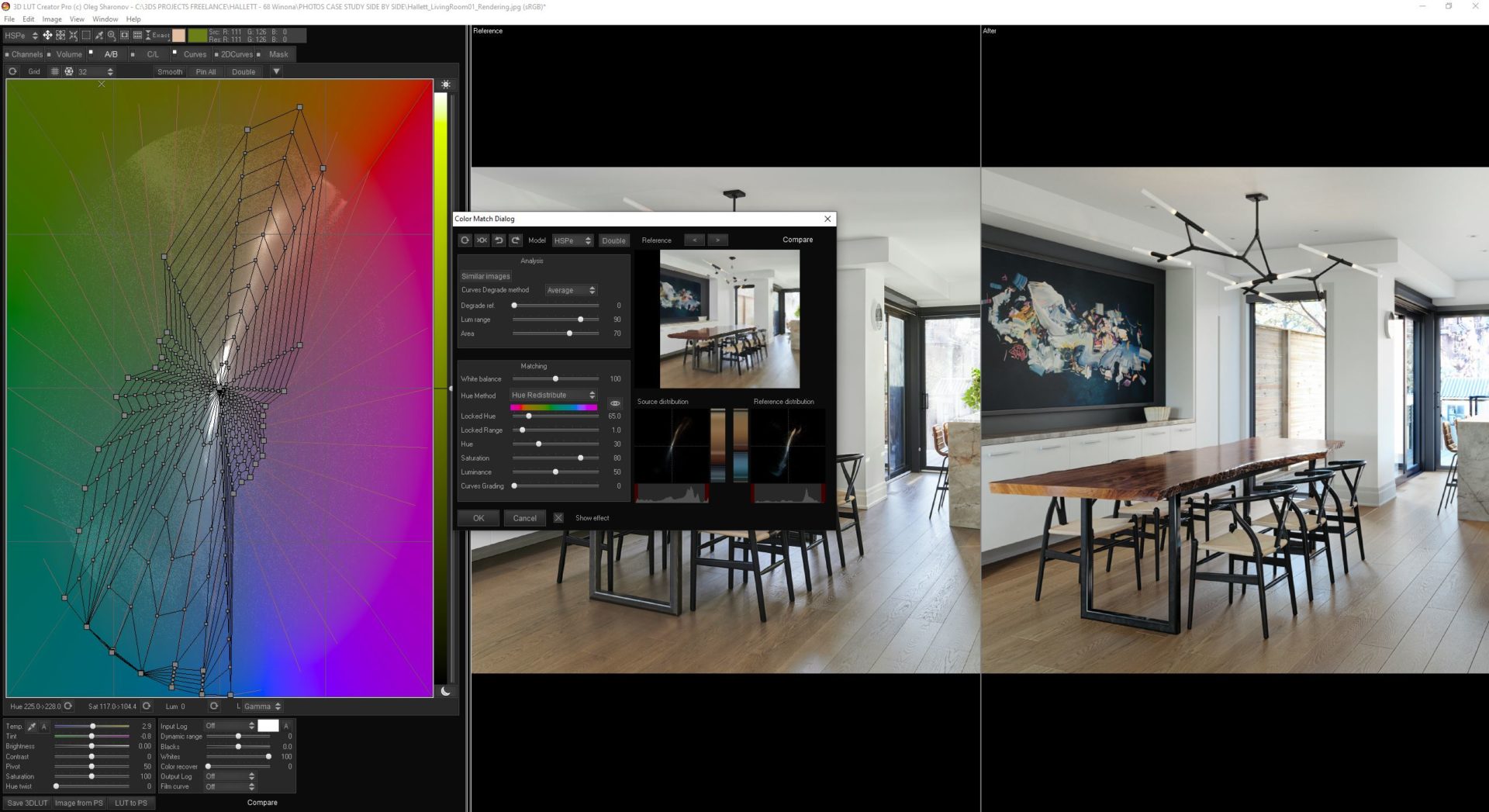

Camera exposure ranges from 400 to 1500, with gamma set to 1.0. Using a 32-bit draft render, I created a custom LUT in 3D LUT Creator to bring the saturation and hues closer to the photographs.

3D LUT Creator has a powerful “color match to reference” feature and many other grading tools not found in Photoshop.

I loaded the LUT exported from the color match into the LUT slot under Tone Mapping in the Fstorm render settings and enabled it. The strength averaged 0.7, since the LUT was combined with per-camera adjustments to the contrast and burn values.
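To make the strength setting concrete, here is a minimal Python sketch of what a 3D LUT applied at partial strength does to a single pixel. It assumes, without confirmation from Fstorm’s documentation, that strength linearly blends the ungraded color with the LUT output, and that colors are display-referred values in the 0 to 1 range. The `sample_lut` and `apply_lut` names and the nested-list LUT layout are hypothetical; a real .cube file stores the same grid as a flat list of entries that a loader would reshape.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
Lut3D = List[List[List[RGB]]]  # hypothetical layout: lut[r][g][b], grid size n >= 2


def sample_lut(lut: Lut3D, rgb: RGB) -> RGB:
    """Trilinearly sample a cubic 3D LUT at an input color in [0, 1]."""
    n = len(lut)
    # Map each clamped channel onto LUT grid coordinates.
    pos = [min(max(c, 0.0), 1.0) * (n - 1) for c in rgb]
    # Lower grid index per channel, kept <= n - 2 so index + 1 stays in range.
    i0 = [min(int(p), n - 2) for p in pos]
    frac = [p - i for p, i in zip(pos, i0)]
    out = [0.0, 0.0, 0.0]
    # Weighted sum over the 8 surrounding grid corners.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                w = ((frac[0] if dr else 1 - frac[0]) *
                     (frac[1] if dg else 1 - frac[1]) *
                     (frac[2] if db else 1 - frac[2]))
                corner = lut[i0[0] + dr][i0[1] + dg][i0[2] + db]
                for k in range(3):
                    out[k] += w * corner[k]
    return (out[0], out[1], out[2])


def apply_lut(lut: Lut3D, rgb: RGB, strength: float = 0.7) -> RGB:
    """Blend the original color toward the LUT result (assumed linear mix)."""
    graded = sample_lut(lut, rgb)
    return tuple((1 - strength) * a + strength * b for a, b in zip(rgb, graded))
```

Under this linear-mix assumption, a strength of 0.7 keeps 30% of the original color in every channel, so the LUT pulls the image toward the reference without fully replacing the per-camera tone mapping.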


GPU Rendering

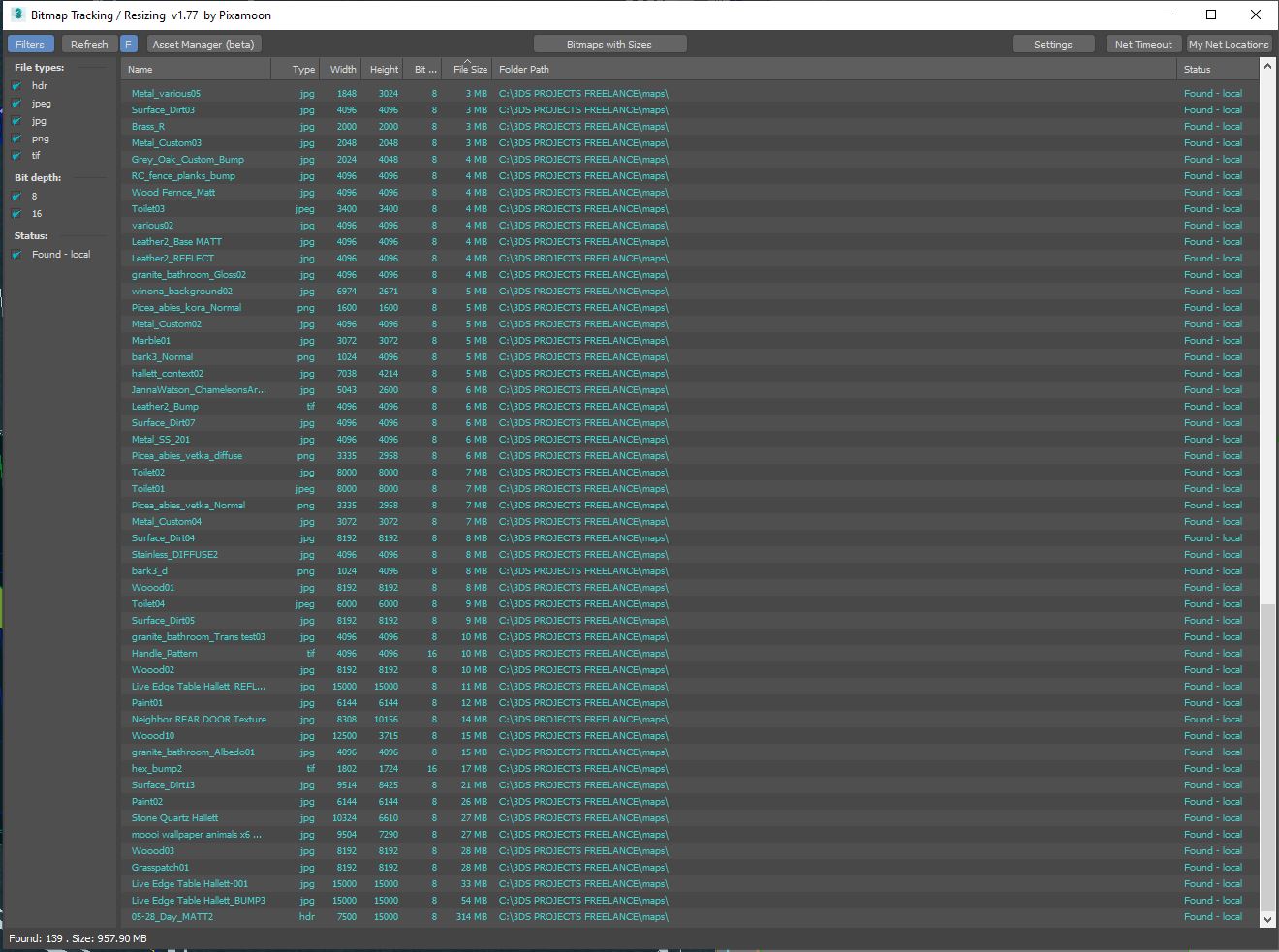

Fstorm is an unbiased GPU render engine. Because it renders on the GPU, the entire scene must fit within the available VRAM of the graphics card. Proxies only reduce the size of the saved 3ds Max file; the polycount of those proxies is still loaded into GPU memory. The only ways to fit your scene into your graphics card’s VRAM are to 1) reduce polycount, 2) use instances as much as possible, and 3) reduce bitmap texture size.
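To see why bitmap size matters so much, the arithmetic below estimates the raw footprint of an uncompressed texture: width × height × channels × bytes per channel. This is a back-of-the-envelope sketch assuming uncompressed 8-bit RGB storage with no mipmaps; Fstorm’s internal texture format and its built-in compression will change the real numbers.

```python
def texture_bytes(width: int, height: int, channels: int = 3,
                  bytes_per_channel: int = 1) -> int:
    """Raw size of one uncompressed texture in bytes (no mipmaps, no compression)."""
    return width * height * channels * bytes_per_channel


def to_mib(n_bytes: int) -> float:
    """Convert bytes to mebibytes."""
    return n_bytes / (1024 * 1024)


if __name__ == "__main__":
    # Square 8-bit RGB maps at common resolutions.
    for label, side in [("8K", 8192), ("4K", 4096), ("2K", 2048)]:
        print(f"{label} RGB 8-bit: {to_mib(texture_bytes(side, side)):.0f} MiB")
    # Prints 192, 48 and 12 MiB respectively; 16 bits per channel doubles each figure.
```

Because memory scales with the square of the resolution, a 2K copy of an 8K map needs one sixteenth of the memory.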

To help manage bitmap sizes, I rely heavily on Pixamoon’s script, which makes it easy to generate bitmaps at various sizes and compression levels. If you run out of VRAM, downsize the bitmap or convert it to JPG, add a suffix such as _2K, and save it to the same directory. You can always reload the high-resolution image if you notice you’ve degraded a bitmap too much.
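The naming convention described above can be sketched as a small helper that works out the downsized dimensions and the suffixed file name while leaving the original untouched. This is a hypothetical illustration, not Pixamoon’s script: `downsized_variant` and its parameters are invented here, and the actual resampling and JPG encoding would still be done by the script or an image library.

```python
from pathlib import PurePosixPath


def downsized_variant(path: str, width: int, height: int,
                      long_edge: int = 2048, ext: str = ".jpg"):
    """Return (new_path, new_width, new_height) for a downsized copy of a bitmap.

    Hypothetical helper: the copy sits in the same directory with a size suffix
    (e.g. _2K), so the original high-resolution file is never overwritten.
    """
    # Scale so the longer side equals long_edge, but never upscale.
    scale = min(1.0, long_edge / max(width, height))
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))

    # _2K, _4K, ... for multiples of 1024, otherwise an explicit pixel count.
    if long_edge % 1024 == 0:
        suffix = f"_{long_edge // 1024}K"
    else:
        suffix = f"_{long_edge}px"

    src = PurePosixPath(path)
    new_path = src.with_name(f"{src.stem}{suffix}{ext}")
    return str(new_path), new_w, new_h
```

For example, `downsized_variant("maps/oak_diffuse.tif", 8192, 4096)` returns `("maps/oak_diffuse_2K.jpg", 2048, 1024)`, so the TIF stays in place for the bump and displacement cases that need it.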

Fstorm also has built-in compression, which works on top of your compressed bitmaps. Heavy JPG compression rarely makes a noticeable difference for diffuse and reflection maps. Finely detailed bump and displacement maps often require the full uncompressed TIFs, which you can always revert to since the script does not overwrite the original file.

Post Production

As you can see from the screenshot of the RT render window next to the photograph, there is almost no need for post-production. I made minor Levels adjustments and masked specific objects, such as the leather of the stool, to match real life more closely. You may also notice that the photographer removed the spotlights and outlets in post, whereas I kept them in the renderings!

Final Comments

This was a passion project for me, and matching a rendering to real life is something I’ve wanted to create and share since the beginning of my career. So many minor adjustments went into this setup that I couldn’t possibly cover them all. If you want to dive deeper into this scene, visit my website.

Polytown Media LTD

© All Rights Reserved 2022